Research

Data Science at dv01: Data Pipelines and the Spark Query Plan as a Source of Data

12 August 2020

Introduction

In a modern data-centric business, one of the main challenges is collecting heterogenous data from different sources and converting them into a unified schema so that the data can be easily analyzed. dv01 is a market leader in data management and aggregation in the debt capital markets. Messy, disparate data is a critical issue for dv01 and in practice, a large amount of domain knowledge, manual effort, and time are required to integrate these different data sources. Data science and machine learning have a huge potential in helping automate repetitive tasks such as schema matching and data standardization, which will help streamline the workflows for data engineers and analysts.

Modern machine learning algorithms, however, require a sizable amount of structured and labelled data. Domain experts at dv01 have manually cleaned hundreds of different datasets in order to build out the data pipeline that exists today. Due to the diverse and segmented nature of the business, most of this information is embedded in individual processes, often in the form of code. It would be a daunting task to have the domain experts revisit the process or to have the data engineers refactor the code.

In this article, we will show that because we use Apache Spark as the main computing engine for data pipeline processing, we are able to extract information from key steps in the data pipeline in a systematic way without altering the existing data pipeline processes. We will also show that the extracted knowledge, in turn, can be used to improve internal processes.

Data Ingestion and Integration

In the last decade or so, data has become an essential and integral part of most businesses. As most parts of business processes become digitized, more and more data is available. However, one of the main challenges faced by many companies is in finding coherent ways to analyze vast amounts of data from many different sources.

One strategy for achieving the goal for data integration is to transform the data into a single data schema. The common schema is constructed with a lot of domain knowledge to make sure it captures a majority of the information in the original data. A single unified schema allows users to easily analyze, compare, and aggregate the data from all sources together.

Because data sources are generated by several organizations, they can have different schemas (i.e. column names, column types, values) and column definitions. In order to fit the data from these sources into a common schema, a significant number of transformations are needed in the process: mapping columns from one name to another, converting the values of a column into another set of values, combining several fields into one field in the common data schema, and calculating missing columns from existing columns. The process that transforms dataset(s) from one data source to a dataset represented in the common data schema is called a data pipeline. Domain knowledge and deep understanding of the source and unified schemas are embedded into the pipeline code by data analysts and engineers.

Data pipelines need to balance two competing factors. The first is to ensure that the design of the standardizing and normalizing process is a clean and unified one. The other factor is that data pipelines need to accommodate the complexities that exist between different data sources, the urgent timeline for business needs, and the evolving requirements the data pipelines need to satisfy over time. dv01 constructs its data pipelines by grouping data from similar sources while giving the flexibility to data analysts and data engineers to create separate pipelines for different classes of data sources and workflows. One undesirable consequence of this design to data scientists is that the domain knowledge that is embedded into the pipeline code is extremely non-uniform and unstructured.

The Need for Structured Data for Data Pipeline Automation

In order to automate data cleansing and transformation, we need a structured representation of the previous work performed by domain experts. There are many steps to transform and convert raw data to a common data schema, and they require human intervention and expertise. Analysts and engineers need to thoroughly understand external file specs and our schema, as well as financial terms and formulas in order to properly standardize the data.

On the other hand, most steps of the tasks described above can be fairly mechanical once one has become familiar with the processes. In many cases, the difference between equivalent column names could be a simple permutation, a synonym, some shorthand spelling, or even misspelling of a column in another data source. If these relatively simple tasks could be handled by a computer automatically, a lot of repetitive work would be eliminated.

With the development of modern data science and machine learning algorithms, problems with non-numerical fields or high ordinance variables such as natural language contents can be handled. However, machine learning algorithms do not have any knowledge of the problem to begin with. In order for machine learning algorithms to be applied to a specific problem, we first need to have a sizable amount of data that has already been processed and mapped by human experts, called labeled data. The labeled data is then used to “train” the algorithm, so that it can “learn” how to solve the problem going forward. Large amounts of labeled data is an essential building block for useful data science and machine learning algorithms.

dv01 has been processing financial data for years. A lot of human intelligence and intellectual capital has been built into the data pipeline processes and code. As mentioned previously, the pipeline structures and code are built in a heterogenous way, which means the labeled data exists in our codebase in a highly unstructured way. In order to use the labeled data for machine learning, it needs to be in a structured format. Many data pipeline designs make it difficult to extract labeled data without a significant or complete rewrite of the codebase. However, dv01 has designed an innovative methodology that allows for the extraction of labeled data from a data pipeline framework built on Apache Spark platform, without the need to modify the existing data pipeline code base.

Spark as a Data Pipeline Engine

The dv01 data pipeline runs on Apache Spark, an open-source, in-memory computing framework that is built with distributed cloud computing and parallelism architecture from the ground up. It is a cutting-edge and ideal platform for a data-driven business to build data pipelines on. There are other advantages that Spark has over other choices: a wide range of cloud ecosystems, rich functional programming models, and extensive libraries for many data related tasks.

Extracting Spark Query Plan as a Source of Labeled and Structured Data for Data Science

Spark utilizes lazy evaluation, meaning no operation is actually performed to the data until required or explicitly instructed. Spark compiles all of the lazily evaluated actions into a query plan which is then optimized. Query plans are a great way to understand how your code is actually being executed by Spark, in case of any bottlenecks. As we discovered at dv01, it can also be a great source of data about your pipeline. Since our entire pipeline is lazily evaluated (aside from a few exceptions), all of the data transformations performed in the pipeline have to exist in a semi-structured way in the Spark query plan.

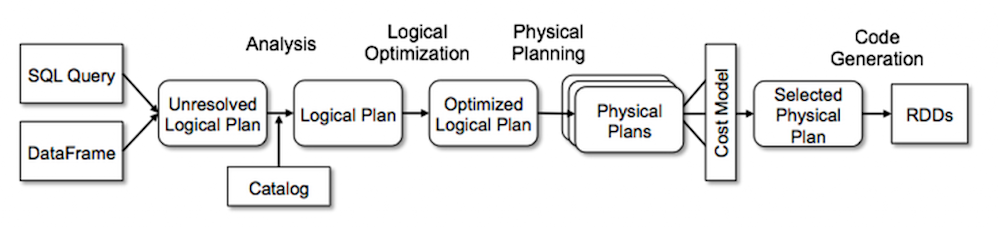

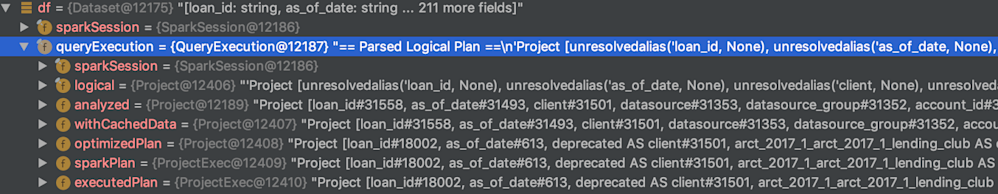

Under the hood, Spark optimizes the operations in three phases: a logical plan, an optimized plan, and a physical plan. Since the logical plan is the closest to the raw operations being written out in code and is produced in a deterministic way, this step made the most sense to extract for our purposes of understanding the pipeline.

All of the plans are available via the queryExecution attribute of a Spark DataFrame. Each plan exists in a nested structure of operations and each operation is an array of expressions that represent the actions being performed to a series of columns. Traversing this structure, one can pull out all of the operations performed on the data in a structured format. From this data, we can extract the raw columns used to calculate each field in the unified schema and the calculations applied to each of the raw columns. In essence, we are able to see all of our data pipelines - how all of our schemas map to our unified schema - simply by digging into Spark. This treasure trove of information about our pipeline allows us to pursue a variety of projects, such as automated schema mapping, value standardization, code refactoring, and improved testing.

Use Cases for the Extracted Structured Data

Automated Schema Mapping

From the Spark query plan, we have collected thousands of mapped field name pairs from our pipelines, cleaning and storing them in a structured table. Now, with our labeled data, we have built a machine learning algorithm that scores the likelihood of a column being mapped to a dv01 schema field given the name of the column and other information. Different teams within the company have used this algorithm as part of their workflow with great success. Additionally, we have been able to utilize this capability to improve our new Tape Cracker application, so that clients can leverage the information from our pipeline to automate much of their data cleaning.

Automated Value Mapping or Value Standardization

Another challenge that we often face in data ingestion and integration is to convert one set of enumerated values for a field into another set of values for the same field. For example, let us assume that we have a field that represents the state where an asset (i.e. house or condo) is located. In our data sources, the field can be represented by the full name of the state (New York), can be all capitals (NEW YORK), lack a space (New_York), or just the state abbreviation (NY). In rare cases, the state can be represented by predefined numbers that map back to a code. However, all data sources need to be converted into the same final schema, where the states are represented by two letter abbreviations. For each data source, we need to establish a map between the original representation of the states to the two letter abbreviation format we are using in the final schema.

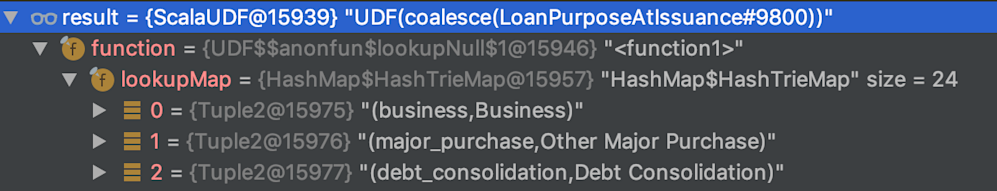

Luckily, these mappings can also be extracted from the Spark query plan, as seen in the above image. In our data pipeline codebase, all the value mappings are done through a predefined map in Scala via a specific set of functions. This makes it possible to locate and identify all the value mapping actions in the execution plan no matter where the data engineers decide to apply these value mapping actions. By simply collecting and cleaning all the previously available value mapping actions and applying a fuzzy matching algorithm, we can quickly identify and suggest value mapping actions for many enum fields once we have seen enough different data sources. This methodology is especially effective since for most enum fields, there are a limited number of industry conventions, especially among the most common fields.

Improve Existing Pipelines by Utilizing the More Organized and Structured Information

As stated above, because of the specific formats and structures of every data source and the coding style and knowledge of the analysts and engineers who worked on cleaning a dataset, the process for each data pipeline can differ significantly. Even though each pipeline might have the same basic elements, the actual code can be very different. Engineers in any industry hope to generalize their code as much as possible, so this non-uniformity in performing similar tasks in a codebase is not desirable.

With a structured version of our data pipeline , we are able to identify similar or duplicate pipelines which rely on different pieces of code. This unnecessary complexity generally happens because the pipelines are implemented by different engineers without realizing the close relationships between the data. By comparing a few key steps, we can quickly identify these pipelines are very much the same.

Monitoring Meaningful Data Pipeline Changes for Testing and Quality Control

A major problem engineers at dv01 face is knowing which pipelines outside of the one they are working on are affected by their code changes, since pipelines often inherit from the same code. To thoroughly test code changes against production would require running every pipeline and looking for changes in the resulting data which is very time consuming and expensive from a compute standpoint.

Since Spark query plans are a deterministic representation of the operations performed by each pipeline, they can be used to determine the pipelines that are affected by a code change. A simple comparison of the difference in plans generated by Spark between the production and development runs highlights changes in any affected pipelines. Compared to the full pipeline, the process also takes a fraction of the time and computing power to generate, saving both time and money.

Caveats

One issue with using the Spark query plan is that UDFs (user defined functions) are not compiled by Spark into expressions and are a black box. UDFs allow programmers to extend the functionality of Spark using Scala syntax. Operations performed by these UDFs are hidden, though. Additionally, if a pipeline evaluates its plan midway through with a collect, any operations before that collect will be lost in the query plan.

Conclusion

Consolidating and utilizing the wealth of information dv01 has accrued over time is one of the most important jobs of the Data Science team. dv01’s advantage in the marketplace is the human expertise we have. Harvesting the Spark query plan has proven to be a very effective way to streamline and build upon that expertise.

- Authored by Ethan Zornow, Wei Wu, Kevin Hsu

Receive the Latest Insights

Subscribe to our mailing list to stay up-to-date with the latest market insights and product updates.

Subscribe to Newsletter